[Update 9 December 2014]

Summaries online

The summaries of the Climate Dialogue on Climate Sensitivity and Transient Climate response are now online (see links below). We have made two versions: an extended and a shorter version.

Both versions can be downloaded as pdf documents:

Summary of the climate dialogue on climate sensitivity

Extended summary of the climate dialogue on climate sensitivity

[End update]

Climate sensitivity is at the heart of the scientific debate on anthropogenic climate change. In the fifth assessment report of the IPCC (AR5) the different lines of evidence were combined to conclude that the Equilibrium Climate Sensitivity (ECS) is likely in the range from 1.5°C to 4.5°C. Unfortunately this range has not narrowed since the first assessment report in 1990.

An important discussion is what the pros and cons are of the various methods and studies and how these should be weighed to arrive at a particular range and a ‘best estimate’. The latter was not given in AR5 because of “a lack of agreement on values across assessed lines of evidence”. Studies based on observations from the instrumental period (1850-2014) generally arrive at moderate values for ECS (and that led to a decrease of the lower bound for the likely range of climate sensitivity from 2°C in AR4 to 1.5°C in AR5). Climate models, climate change in the distant past (palaeo records) and climatological constraints generally result in (much) higher estimates for ECS.

A similar discussion applies to the Transient Climate Response (TCR) which is thought to be more policy relevant than ECS.

We are very pleased that the following three well-known contributors to the general debate on climate sensitivity have agreed to participate in this Climate Dialogue: James Annan, John Fasullo and Nic Lewis.

The introduction and guest posts can be read online below. For convenience we also provide pdf’s:

Introduction climate sensitivity and transient climate response

Guest blog James Annan

Guest blog John Fasullo

Guest blog Nic Lewis

To view the dialogue of James Annan, John Fasullo, and Nic Lewis following these blogs click here.

Climate Dialogue editorial staff

Bart Strengers, PBL

Marcel Crok, science writer

First comments on the guest blog of James Annan:

I enjoyed reading James Annan’s guest blog on climate sensitivity. There is much in his post that I agree with and I found his discussion of nonlinearities in the paleoclimate record to be particularly interesting. I also agree with his characterization of our recent work (Fasullo and Trenberth 2012) as being primarily qualitative in nature. Given the likelihood that the CMIP archives do not span the full range of parametric and structural uncertainties, it seems unlikely that a more quantitative assessment would have been justified. It is clear however, from both our work and the work of others, that various GCMs have particular difficulty in simulating even the basic features of observed variability in both clouds and radiation. Given the importance of related processes in driving the inter-model spread in sensitivity we viewed this as a sound basis for discounting such models, which as it turns out were the only models in CMIP3 with ECS below 2.7. These models were also amongst the oldest in the archive and had been shown in other work to be lacking in key respects. As discussed below, this may present an opportunity for narrowing the GCM-based range of sensitivity.

I also agree with James’ point that an adequate estimation of uncertainty has been lacking generally, though I tend to view the problem of underestimation to be more common than that of overestimation. I think this challenge speaks directly to the question posed by the editors in their introduction as to why AR5 did not choose between the different lines of evidence in forming a single “best estimate”. Doing so would have required a firmer understanding of the uncertainties inherent to each approach than is presently available. Improved assessment of these uncertainties exists as a high priority in my view and one that is achievable in the not-so-distant future.

Lastly, while James makes a good point that there is not necessarily a contradiction or tension between the various approaches if different lines of evidence provide different ranges, it is here that I have reservations. Does this necessarily mean that the likely value in nature lies at the intersection of available ranges and does this also mean that the approaches should be given equal weight? In my view, given the issues regarding uncertainty mentioned above, the answer to both of these questions is likely to be “no”. Potential improvements in so-called “20th Century” approaches include a more thorough consideration of the adequacy of any “prior”, given the rich internal variability of the climate system, and the uncertainty in both forcings and their efficacy. There is also a need to more fully consider the sensitivity of any method to observations, particularly when using ocean heat content. As we show in a paper earlier this year, the choice of an ocean heat content dataset can change the conclusions of such an analysis from being a critique of the IPCC range to being consistent with it.

For paleoclimate-based estimates, as James points out, sensitivity to nonlinearities, data problems, and uncertainty in forcing undermine any strong constraint on ECS and it is unclear (to me at least) whether progress on these fronts presents an immediate opportunity for reducing uncertainty in ECS in the near future. Lastly, I view estimates involving GCMs to be somewhat of a mixed bag. Clearly, some GCMs can be discounted based on their inability to simulate key aspects of observed climate, as discussed above. One would be hard-pressed to argue that the NCAR PCM1 and NCAR CESM1-CAM5 should be given equal weighting in estimating sensitivity. Weighting or culling model archives based on various physically-based rationales is likely to play a key role in constraining GCM estimates of sensitivity in the near future. A major, apparently unavoidable, question for this approach however is whether existing model archives sample the full range of parametric and structural uncertainty in the processes that determine sensitivity.

First comments on the guest blog of James Annan:

May I start by thanking James Annan for taking part in this discussion of climate sensitivity at Climate Dialogue. I am sure that this will be an interesting debate.

I largely agree with most of what James says about PDFs for climate sensitivity, although we have somewhat different approaches to Bayesian methodology. One point I would make is that where the PDFs have different shapes and not merely different widths, one may be more influential at low sensitivities and the other at high sensitivities. Estimated PDFs for ECS from instrumental period studies are generally both narrower and much more skewed (with long upper tails) than those from paleoclimate studies. When the evidence represented in these two types of PDFs is combined, in general the instrumental period PDF will largely determine the lower bound of the resulting uncertainty range but the paleoclimate PDF may have a significant influence on its upper bound. That is because, in general, whichever of the PDFs is varying more rapidly at a particular ECS level will have more influence on the combined studies’ PDF at that point. Instrumental study PDFs for ECS generally have a sharp decline at low ECS values but, with their long upper tails, a very slow decline at high ECS values.

I think that the reasons AR5 downweights various approaches differs between them. For paleoclimate studies, on my reading the AR5 scientists took the view that the uncertainties were generally underestimated, not only because of the difficulty in estimating changes in forcing and temperature but also, importantly, because climate sensitivity in the current state of the climate system might be significantly different from that when it was in substantially different states. There, widening the uncertainty range (effectively flattening the PDF) seems reasonable. For studies involving short term changes, some of which have non-overlapping uncertainty ranges, the concern seems to me more that it is unclear whether the estimates they arrive at really represent ECS, or something different. The case for simply disregarding all such estimates as unreliable is stronger there. The concern is more with the merits of such approaches than with individual studies.

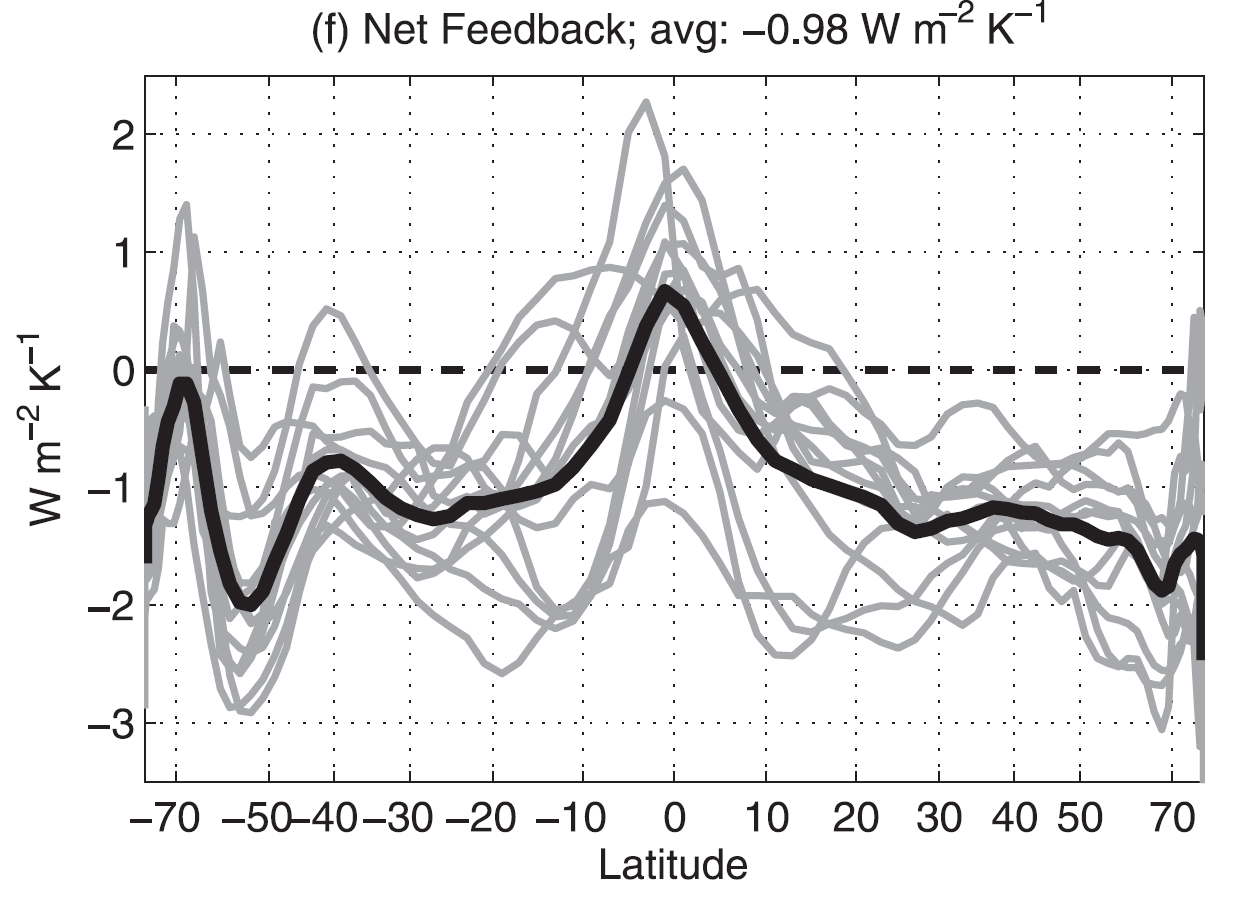

I agree with James’ observation that the transient pattern of warming is likely to be a little different from the equilibrium result, which may result in the ECS estimates from instrumental period warming studies involving only global or hemispherically-resolving models (which usually represent effective climate sensitivity) differing a little from equilibrium climate sensitivity. However, the Armour et al (2013) paper that James cited in that connection was based on a particular GCM that has a latitudinal pattern of climate feedbacks very different from that of most GCMs.

James and I seem to have similar views as to studies based on ensembles of simulations involving varying the parameters of a GCM being of little use. And whilst it is interesting that climate models with higher sensitivities may be better at simulating certain aspects of the climate system than others, it does not follow that their sensitivities must be realistic.

Regarding paleoclimate study ECS estimates, I concur with the conclusions reached in AR5. So, overall, this line of evidence indicates that there is only about a 10% probability of ECS being below 1°C and a 10% chance of it being above 6°C. I think the uncertainties are simply too great to support the narrower ~2–4.5°C range mentioned by James, and I wouldn’t support using that narrower range as a prior in a Bayesian analysis.

Reference

Armour, K. C., Bitz, C. M., & Roe, G. H. (2013). Time-Varying Climate Sensitivity from Regional Feedbacks. Journal of Climate, 26, 4518–4534. doi:10.1175/JCLI-D-12-00544.1

First comments on the guest blog of John Fasullo:

John Fasullo focusses on the change lower limit of the IPCC AR5 “likely” range from 2 (in AR4) to 1.5 (in AR5), arguing that although it was understandable, it was wrong on the basis that models can reproduce periods of little warming. While I don’t presume to know what was going on in the IPCC authors’ esteemed minds, I believe it’s far preferable to consider the climate sensitivity estimation on the merits of the available literature rather than considering the previous IPCC AR4 estimate (and/or the GCM model range) as some sort of prior or null hypothesis to only be changed if and when the observational data become overwhelming. Whether we can still argue that the recent observed global mean temperature time series is consistent with the GCM ensemble (at some arbitrary level of confidence) is rather beside the point. The observed time series is indisputably close to the lower end of the range, and any reasonable estimate had better take that into account.

Some recent estimates, like Stott et al (2013), look beyond the global or hemispheric mean temperature change, and consider the full spatial pattern of response to different forcings. Fasullo’s arguments don’t appear to apply to this sort of detection and attribution approach at all.

Reference

Stott, P., Good, P., Jones, G., Gillett, N., & Hawkins, E. (2013). The upper end of climate model temperature projections is inconsistent with past warming. Environmental Research Letters, 8(1), 014024. doi:10.1088/1748-9326/8/1/014024

First comments on the guest blog of John Fasullo:

May I start by thanking John Fasullo for taking part in this discussion of climate sensitivity at Climate Dialogue. I can see from the title of his guest blog that we are in for an interesting debate.

I have just a few comments on John’s opening section The Challenge. In relation to climatological constraint approaches, my analysis – summarised in my guest blog – of the Sexton et al (2011) and Harris et al (2013) studies featured in AR5 (only the TCR estimate from the latter being shown) establishes that perturbing GCM parameters does not provide a valid way to estimate ECS, at least for the HadCM3/SM3 model that has been widely used for this purpose. I note that James Annan no longer considers such methods to be of much use.

Whether use of CMIP ensembles and ‘emergent constraints’ will provide much of a constraint on climate sensitivity is an open question. At present, supposed ‘emergent constraints’ seem primarily to tell one which models are good or bad at various things. For instance, Cai et al (2014) showed that 20 out of 40 CMIP3 and CMIP5 models are able to reproduce the high rainfall skewness and high rainfall over the Nino3 region, whilst Sherwood et al (2014) shows that 7 CMIP3 and CMIP5 models (5 of which were included in Cai’s analysis) have a lower-tropospheric mixing index falling within the observational (primarily model reanalyses, in fact) uncertainty ranges – and that those models have high climate sensitivities. Unfortunately, no model satisfies both Cai’s and Sherwood’s tests. A logical conclusion is that at present models are not good enough to rely on the climate sensitivities of any of them.

John says that to some extent the distinctions between ECS estimation methods are artificial. But although there are elements in common, there are fundamental differences. As he says, all GCMs have used the instrumental record to select model parameter values that produce plausible climates. However, as an experienced team of climate modellers has written (Forest, Stone & Sokolov, 2008), many combinations of model parameters can produce good simulations of the current climate but substantially different climate sensitivities. Whilst observations inform model development, the resulting model ECS values are only weakly constrained by those observations.

By contrast, in a properly designed observationally-based study, the best estimate for ECS is completely determined by the actual observations, as is normal in scientific experiments. To the extent that the model, simple or complex, used to relate those observations to ECS is inaccurate, or the observations themselves are, then so will the ECS estimate be. But, in any event, the ECS estimate will be far more closely related to observations than are GCM ECS values.

Moving on to the need for physical understanding, I certainly agree about the desirability of a physically-based perspective. However, I fear that the climate system may be too complex and current understanding of it too incomplete for strong constraints on ECS or TCR to be achieved in the near future from just narrowing constraints on individual feedbacks. Certainly, I doubt that we are close to that point yet. Attempts have been made to constrain cloud feedback, where uncertainties are greatest, from observable aspects of present-day clouds. But AR5 (Section 7.2.5.7) judges these a failure to date, concluding that “there is no evidence of a robust link between any of the noted observables and the global feedback”.

At present, there seems little doubt that energy-budget based approaches are the most robust way of estimating ECS and TCR. They involve a very simple physical model – directly based on conservation of energy – with relatively few assumptions, and they in effect measure the overall feedback of the climate system using the longest and least uncertain observational records available, of surface temperatures. Their main drawback is the large uncertainty as to changes in total radiative forcing, resulting principally from uncertainty in aerosol forcing.

Better constraining aerosol forcing is the key to narrowing uncertainty in all ECS and TCR estimates based on observed multidecadal warming during the instrumental period, not only energy budget estimates. But it is encouraging that all instrumental period warming based observational studies that have no evident serious flaws now arrive at much the same ECS estimates, in the 1.5–2.0°C range. As well as simple energy budget approaches using the AR5 best estimates for aerosol and other forcings, that includes several studies which form their own estimates of aerosol forcing using suitable data – more than just global temperature – and relatively simple (but hemispherically-resolving) or intermediate complexity models.

My readings of the conclusions in Chapters 10 and 12 of AR5 WG1 is that the scientists involved shared my view that higher confidence should be placed on studies based on warming over the instrumental period than on other observational approaches.

John raises the issue of varying definitions of ECS. IPCC assessment reports treat equilibrium climate sensitivity as relating to the response of global mean surface temperature (GMST) to a doubling of atmospheric CO₂ concentration once the atmosphere and ocean have reached equilibrium, but without allowing for slow adjustments by such components as ice sheets and vegetation. The term Earth system sensitivity (ESS) is used for the equilibrium response taking into account such adjustments.

In practice, what many observationally-based studies estimate is Effective climate sensitivity, a measure of the strengths of climate feedbacks at a particular time, evaluated from model output or observations for evolving non-equilibrium conditions. Effective climate sensitivity does take changes in both the upper and the deep ocean into account, as well as changes in the cryosphere other than ice sheets, but in some GCMs it is a bit lower than equilibrium climate sensitivity. AR5 concludes (Section 12.5.3) that the climate sensitivity measuring the climate feedbacks of the Earth system today “may be slightly different from the sensitivity of the Earth in a much warmer state on time scales of millennia”. But the terms effective climate sensitivity and equilibrium climate sensitivity are largely used synonymously in AR5. From a practical point of view, changes over the next century will in any event be more closely related to TCR than to either variant of ECS, let alone to ESS.

John raises the difficulty of finding the appropriate statistical “prior” for the free parameters of a model. That is far more of a problem with a GCM than with a simple model, because of the much higher dimensionality of the parameter space – a GCM has hundreds of parameters. Even assuming some combinations of parameter values will produce a realistic simulation of the climate, that may be a tiny and almost impossible-to-find subset of possible parameter combinations. The number of degrees of freedom available in relevant observations is limited, bearing in mind the high spatiotemporal correlations in the climate system and the large uncertainty in most observations (from internal variability as well as measurement error). It is therefore more practicable to constrain a smaller number of parameters using observations.

Where the intent is to allow the observations alone to inform parameter estimation (objective estimation, as is usual for scientific experiments), there are well established methods of finding the appropriate statistical prior. See Jewson, Rowlands and Allen (2009) for how to apply these in the context of a climate model. Or non-Bayesian methods such as modified or simple profile likelihood, which do not involve explicitly selecting a prior, can be used. Incorporating subjective beliefs or other non-observational information about parameter values is more complex. Doing so may also not be wise. A parameter value thought to be physically unlikely may be necessary in order to compensate for an erroneous or incomplete representation of the climate process(es) involved.

Hiatus

I believe John’s views of the impact of “hiatus” in global surface warming over the last circa 15 years on estimation of ECS are seriously mistaken. He starts by claiming that, based on simple models, the hypothesis that the hiatus argues for a reduction in the lower bound of the range for ECS was found sufficiently compelling that IPCC AR5 reduced the lower bound of its likely range for ECS. John cites Chapter 12 in that regard. But Box 12.2, which covers equilibrium climate sensitivity and transient climate response, does not even mention the slowdown in warming this century. It says (my emphasis):

Based on the combined evidence from observed climate change including the observed 20th century warming, climate models, feed¬back analysis and paleoclimate, ECS is likely in the range 1.5°C to 4.5°C with high confidence.

and goes on to say:

“The lower limit of the likely range of 1.5°C is less than the lower limit of 2°C in AR4. This change reflects the evidence from new studies of observed temperature change, using the extended records in atmosphere and ocean. These studies suggest a best fit to the observed surface and ocean warming for ECS values in the lower part of the likely range.”

John’s argument that observationally-based estimates pointing to ECS being lower than previous consensus estimates are strongly influenced by the hiatus seems quite widespread. A recent peer-reviewed paper (Rogelj et al, 2014) cites in that connection four studies, including the only three instrumental-period warming based observational ECS estimates featured in Figure 1 of Box 12.2 that I conclude are sound. It first discusses the old AR4 2–4.5°C likely range for ECS, saying:

“Some newer studies have confirmed that range (Andrews et al 2012, Rohling et al 2012), but others have raised the possibility that ECS may be either lower (Schmittner et al 2011, Aldrin et al 2012, Lewis 2013, Otto et al 2013) or higher (Fasullo and Trenberth 2012, Sherwood et al 2014) than previously thought.”

Rogelj et al then conclude (my emphasis):

“A critical look at the various lines of evidence shows that those pointing to the lower end are sensitive to the particular realization of natural climate variability (Huber et al 2014). As a consequence, their results are strongly influenced by the low increase in observed warming during the past decade (about 0.05 C/decade in the 1998–2012 period compared to about 0.12 C/decade from 1951 to 2012, see IPCC 2013)… ”

It is clear from the context that the claim that “their results are strongly influenced by the low increase in observed warming during the past decade” refers back to the results of the Schmittner et al 2011, Aldrin et al 2012, Lewis 2013 and Otto et al 2013 studies. But the claim is completely incorrect in relation to all four studies:

• Schmittner et al 2011 estimated ECS from temperature reconstructions of the Last Glacial Maximum.

• Aldrin et al 2012 used data ending in 2007 for its main results ECS estimate but also presented an alternative estimate based on data ending in 2000. The median ECS estimate using data only up to 2000 was lower, not higher, than the main one using data to 2007. Moreover, their updated ECS estimate using data up to 2010, published in Figure 10.20b of AR5, had a higher median than that using data to 2007.

• The Otto et al 2013 median ECS estimate using 2000s data was the highest of all its ECS estimates; the ECS estimates using data from the 1970s, 1980s or 1990s were all lower.

• Lewis 2013 used data ending in August 2001.

Evidence for climate sensitivity being lower than previous consensus views has indeed been piling up, but that is not because of the hiatus. On the other hand, I agree that any claim that global warming has stopped is nonsense. As John says, a planetary radiative imbalance persists, as shown by ocean heat uptake data. However, the level of imbalance appears to be only about 0.5 W/m², and if anything to have declined slightly since the turn of the century.

I believe that the suggestion John refers to (in Schmidt et al, 2014), that reductions in total forcing (ERF) are driving the hiatus, is wide of the mark. That paper claims CMIP5 forcings, based on the historical estimates to 2000 or 2005 and representative concentration pathway (RCP) scenarios thereafter, have been biased high since 1998. The largest claimed bias is in volcanic forcing, which Schmidt et al say averaged -0.3 W/m² from over 2006–11, almost treble AR5’s best estimate, and nearly twice what their cited source seems to indicate. Their assumption that CMIP5 models all had zero volcanic forcing post 2000 is also dubious; the RCP forcings dataset has volcanic forcing averaging -0.13 /m² over that period. Their assumption that increases in nitrate aerosols affected aerosol forcing by -0.1 W/m² since the late 1990s has little support in the cited source. Their application of a multiplier of two to differences in estimated solar forcing has no support in AR5. My conclusion that the Schmidt et al study is biased and almost certainly wrong is supported by statements in Box 9.2 of AR5. It says there that over 1998–2011 the CMIP5 ensemble-mean ERF trend is actually slightly lower than the AR5 best-estimate ERF trend, and that “there are no apparent incorrect or missing global mean forcings in the CMIP5 models over the last 15 years that could explain the model–observations difference during the warming hiatus”.

I concur with John’s view that natural internal climate system variability has probably made a substantial contribution to the hiatus. But it probably made a significant contribution in the opposite direction to the fast warming over the previous quarter century, due principally to the Atlantic Multidecadal Oscillation then being in its warming phase.

CAM5 model

I am not surprised that the NCAR CESM1-CAM5 model matched global actual warming reasonably well from the 1920s until the early 2000s despite having a high ECS of 4.1°C and (according to AR5) a TCR of 2.3°C. The CESM1-CAM5.1 model’s aerosol forcing was diagnosed (Shindell et al, 2013) as strengthening by -0.7 W/m² more from 1850 to 2000 than per AR5’s best estimate. If the model’s other forcings were in line with AR5’s estimates, its increase in total ERF over 1850–2000 would have been only 64% of the AR5 best estimate. That much ERF change and a TCR of 2.3°C would have produced the same warming as a model with a TCR of 1.48°C in which ERF had changed in line with AR5’s best estimate.

As John says, the ensemble mean in his Figure 2 suggests that, due to forcing, certain decades are predisposed to a reduced rate of surface warming. But that is hardly surprising: decades having a major volcanic eruption near their start or end will tend to have respectively high or low trends, whereas others will tend to be in between. So, due to the 1991 Mount Pinatubo eruption, decades ending in the early 1990s show low trends whilst those ending around 2000 show high trends. Ensemble mean trends for decades ending in the last few years, whilst therefore lower than those for decades ending around 2000, are higher than for almost any other decades. I would challenge John’s view that 2010–2012 represented exceptional La Niña conditions. According to the MEI Index, it had only the 10th lowest 3-year index average since 1952. As 2012 had a positive index value, the average for 2010-11 is perhaps a fairer test. That had the 7th lowest 2-year average since 1951, still hardly exceptional: it was under half as negative as for 1955–56.

I endorse John’s call for well-understood, well-calibrated, global-scale observations of the energy and water cycles, but would emphasise the need for better observations of clouds and their interactions with aerosols. In my view, too much of the available resources were put into model development in the past and not enough into observations. Unfortunately, for many variables only a long record without gaps is adequate. The ARGO network has indeed greatly improved estimates of ocean heat content (OHC), but what a shame it has only been operating for a decade. Modern ocean “reanalysis” methods are no substitute for good observations. The modern ORAS4 reanalysis is clearly model-dominated in the pre-Argo period: the huge declines in 0-300 m and 0-700 m OHC shown in Balmaseda et al (2013) after the 1991 Mount Pinatubo eruption are absent in the observational datasets.

References

Balmaseda, M, K Trenberth and E Kallen, Distinctive climate signals in reanalysis of global ocean heat content. Geophys Res Lett, 40, 1–6, doi:10.1002/grl.50382

Cai, W et al, 2014. Increasing frequency of extreme El Niño events due to greenhouse warming. Nature Climate Change 4, 111–116

Forest, C.E., P.H. Stone, and A.P. Sokolov, 2008. Constraining climate model parameters fromobserved 20th century changes. Tellus, 60A, 911–920

Jewson, S., D. Rowlands and M. Allen, 2009: A new method for making objective probabilistic climate forecasts from numerical climate models based on Jeffreys’ Prior. arXiv:0908.4207v1 [physics.ao-ph].

Rogelj, Meinshausen, Sedlacek and Knutti, 2014. Implications of potentially lower climate sensitivity on climate projections and policy. Environ Res Lett 9 031003 (7pp)

Sherwood, SC, S Bony & J-L Dufresne, 2014. Spread in model climate sensitivity traced to atmospheric convective mixing. Nature 505, 37–42

Shindell, D. T. et al, 2013. Radiative forcing in the ACCMIP historical and future climate simulations. Atmos. Chem. Phys. 13, 2939–2974

First comments on the guest blog of Nic Lewis:

Nic Lewis appears to be arguing primarily on the basis that all work on climate sensitivity is wrong, except his own, and one other team who gets similar results. In reality, all research has limitations, uncertainties and assumptions built in. I certainly agree that estimates based primarily on energy balance considerations (as his are) are important and it’s a useful approach to take, but these estimates are not as unimpeachable or model-free as he claims. Rather, they are based on a highly simplified model that imperfectly represents the climate system.

For instance, one well-known limitation of such models that effective climate sensitivity is not truly a constant parameter of the earth system, but changes through time depending on the transient response to radiative forcing. This introduces an extra source of uncertainty (which is probably a negative bias) into estimates based on this approach.

I’m disappointed in this response. Lewis addressed your objections, particularly with regard to effective vs equilibrium climate sensitivity.

First comments on the guest blog of Nic Lewis:

I find the statistical approach promoted by Nic Lewis (and others preceding him) to be a compelling and potentially promising contribution in the effort to better understand and constrain climate sensitivity. The approach provides an elegant and powerful means for understanding the collective, gross-scale behavior of the climate system using a simple statistical framework, if implemented appropriately. However I also have reservations regarding the method in its current form. It has yet to be widely scrutinized in a physically realistic framework, has multiple untested assumptions, and is likely to have considerable sensitivity to a various details surrounding its implementation.

While I am optimistic that many of these issues can be addressed in future work, my confidence in the robustness of the sensitivity estimates and associated bounds of uncertainty currently promoted by Nic is low, given these issues. From my point of view, some of the key questions remaining to be addressed include:

• What is the method’s sensitivity to internal variability and uncertain forcings (and their combined direct / indirect effects and efficacy), particularly in situations in which their variability is not orthogonal?

• How long of a record is required to obtain a robust estimate of sensitivity? Is it asking too much of a purely statistical approach to distill the combined effects of uncertain and variable forcings from internal variability using a finite data record?

• In what contexts can instrumental estimates be viewed as more reliable than other estimates and in what situations are they particularly vulnerable to error?

• How can a more process-relevant statistical approach be developed that takes better advantage of the available data record? How do the various trade-offs between dataset uncertainty and relevance to the planetary imbalance, climate change, and feedbacks play out in such an effort?

While I could go into details addressing the many points made and studies cited by Nic in his post, in order to avoid repeating the points made in my original post and to promote a broader discussion without getting lost in the weeds, I think it might be useful to focus on a few key overarching issues on which there seems to be fundamental disagreement. From my perspective:

1) All estimates of climate sensitivity require a model. It is the complexity of the underlying model that varies across methods. Attempts to isolate the effects of CO2 on the temperature record are inherently an exercise in attribution and the use of a model is therefore unavoidable.

2) Given (1), it is a misnomer to present 20th Century instrumental approaches as being “observational estimates”. It is therefore also inappropriate to present them as being superior to other approaches based on such an assertion. Moreover, as discussed in my original post, the distinction between the approaches is somewhat contrived. In fact, GCM’s incorporate several orders of magnitude more observational information in their development and testing than do the typical “instrumental” approaches described by the editors (more on this below).

3) All methods have their weaknesses. While Nic has done a good job pointing out issues with other methods, he underestimates those in his own and in doing so is at odds with the originators of such techniques (e.g. Forster et al. 2013). Without a physical understanding of the climate system, based on robust observations of key processes, which can likely be promoted in instances by statistical approaches, there cannot be high confidence in climate projections. Statistical techniques, particularly when trained over a finite, complex, and uncertain data record in which forcings are also considerably uncertain, are no panacea to the fundamental challenge of physical uncertainty.

The good news, in my view, is that at least some of the questions I’ve posed above are readily testable and our understanding of a range of statistical approaches can be significantly improved in the near future. For instance, the NCAR Large Ensemble now provides the opportunity to apply assessments using Bayesian priors to a physical framework that has been demonstrated to be quite skillful in reproducing many of the observed modes of low frequency variability. The capability of such methods to estimate the known climate sensitivity of the CESM-CAM5 in the midst of realistic internal variability and temporally finite records is quantifiable. In fact, colleagues and I at NCAR are currently collaborating in an effort to do just this. Our initial perspective is that such methods are likely to be first-order sensitive to these effects and that uncertainty assessments such as that provided by Schwartz (2012) are probably much more reasonable than others claiming to provide a strong constraint on models. Our work is ongoing, and as such any definitive conclusion would be premature, but please, stay tuned.

In closing, it is only reasonable to welcome a broad array of approaches in assessing climate sensitivity. Yet, it is also clear that not all approaches have received equal scrutiny and that some perspectives on them have received even less scrutiny. Ultimately it is the thorough scrutiny of all models, whether complex or simple, and methods that will be instrumental in reducing uncertainty. The lure of doing so using purely statistical approaches is appealing, but in my view, is fool’s gold. In the early days of modeling, a time at which global observations of key fields were lacking, I would have advocated for the supremacy of such an approach over poorly constrained GCMs. Yet as I write this commentary, and as I work on a parallel effort to assess decadal variability in GCMs, I cannot help but be struck by a clear irony. The dataset I am using is the pioneering NOAA AVHRR OLR dataset, which completes its fourth decade of reporting next month, beginning in June of 1974. Despite its various blemishes, the achievement in constructing this record is both remarkable and unprecedented, and lessons learned have contributed to numerous follow-on efforts (e.g. CALIPSO, CERES, CLOUDSAT, ERBE, GPCP, GRACE, ISCCP, QUIKSCAT, SSM/I, TOPEX, TRMM, …). Given this era of such remarkable observations, accompanied by similar achievements across a realm of disciplines (e.g. ocean and atmospheric observations, operational models, reanalysis methods, supercomputing, …), I cannot help but be struck by the fact that there are those advocating for assessing climate solely with statistical approaches using simple models that capture little of the climate system’s physical complexity, trained on a limited subset of questionably relevant surface observations, and based on largely untested physical assumptions. It is an argument for which I find little support.

References:

Forster, P. M., T. Andrews, P. Good, J. M. Gregory, L. S. Jackson, and M. Zelinka (2013), Evaluating adjusted forcing and model spread for historical and future scenarios in the CMIP5 generation of climate models, J. Geophys. Res. Atmos., 118, 1139–1150, doi:10.1002/jgrd.50174.

Schwartz, S. E. (2012). Determination of Earth’s transient and equilibrium climate sensitivities from observations over the twentieth century: strong dependence on assumed forcing. Surveys in Geophysics, 33(3-4), 745-777.

Dear John, Nic and James,

I propose to start the discussion with the first question raised in our introduction:

What are the pros and cons of the different lines of evidence?

After studying your guest blogs and the first responses above I conclude there is a major difference in opinion on the pros and cons (and thus the importance or weight) of the first line of evidence, i.e. studies based on observations from the instrumental period that generally arrive at lower values of ECS.

Below I tried to summarize the pros and cons that I found in your contributions so far on this first line of evidence (the references can be found in the guest blogs). Mainly based on his pros, and the rejection of other lines of evidence, Nic arrives at a likely range for ECS that is much lower than reported by the IPCC: 1.2 – 3.0 and a best estimate of 1.7. According to James, the paleoclimate evidence provides ‘reasonable grounds for expecting a figure around to the IPCC canonical range’, which is 1.5 – 4.5, but he adds that ’the recent transient warming (combined with ocean heat uptake and our knowledge of climate forcings) points towards a “moderate” value for the ECS’ between 2.0 to 3.0. John made the point that ’the evidence accumulated in recent years’ justifies a lower bound of the likely range as in AR4, i.e. 2.0 instead of 1.5 in AR5. He did not provide a likely upper bound or a beste estimate yet.

Nic Lewis on observations from the instrumental period:

Pros

1. Anthropogenic signal has risen clear of the noise arising from internal variability and measurement/forcing uncertainty and therefore provide narrower ranges than those from other studies.

2. In a properly designed observationally-based study, the best estimate for ECS is completely determined by the actual observations, as is normal in scientific experiments. In any event, the ECS estimate will be far more closely related to observations than are GCM ECS values.

3. The only studies on observations from the instrumental period that should be regarded as both reliable and able to usefully constrain ECS are Aldrin (2012), Ring (2012), Lewis (2013) and Otto (2013), in accordance with the conclusions of AR5.

4. The robust ‘energy budget’ method of estimating ECS (and TCR) gives results in line with these studies.

5. Finding the appropriate “prior” is far more of a problem with a GCM than with a simple model, because of the much higher dimensionality of the parameter space.

6. Chapters 10 and 12 of AR5 WG1 share my view that higher confidence should be placed on studies based on warming over the instrumental period than on other observational approaches.

7. Annan is right that effective CS is slightly different from ECS, but these terms are largely used synonymously in AR5; Annan cites Armour (2013) but that is based on a GCM that has a latitudinal pattern of climate feedbacks very different from that of most GCMs.

8. Observational evidence is preferable to that from models, as understanding of various important climate processes and the ability to model them properly is currently limited.

Cons

1. Large uncertainty as to changes in total radiative forcing, resulting principally from uncertainty in aerosol forcing.

2. Lindzen & Choi (2011) and Murphy (2009) depend on short-term changes and are deprecated by AR5.

3. Studies using global mean temperature data to estimate aerosol forcing and ECS together are useless. Northern Hemisphere and Southern Hemisphere must be separated.

4. Observational studies with uniform priors greatly inflate the upper uncertainty bounds for ECS.

5. Observational studies using expert priors produce ECS estimates that reflect the prior, with the observational data having limited influence.

James Annan on observations from the instrumental period:

Pros

1. Global warming points to an ECS at the low end of the IPCC range due to better quality and quantity of data and better understanding of aerosol effects (Aldrin et al 2012, Ring et al 2012, Otto et al 2013).

2. Lewis’ estimates based primarily on energy balance considerations is a useful approach to take.

Cons

1. These studies assume an idealised low-dimensional and linear system in which the surface temperature can be adequately represented by global or perhaps hemispheric averages. In reality the transient pattern of warming (or the effective CS) is different from the equilibrium result, which complicates the relationship between observed and future (equilibrium) warming (Armour, 2014).

2. Lewis’ four preferred observational studies are not as unimpeachable or model-free as he claims but based on a highly simplified model that imperfectly represents the climate system.

3. Effective CS is not a constant parameter of the earth system, but changes through time depending on the transient response to radiative forcing. This introduces an extra source of uncertainty (which is probably a negative bias) into estimates based on Lewis’ approach.

John Fasullo on observations from the instrumental period:

No Pros given yet.

Cons

1. These studies are severely limited by the assumptions on which they’re based, the absence of a unique “correct” prior, and the sensitivity to uncertainties in observations and forcing (Trenberth 2013).

2. Uncertainty in observations and the need to disentangle the response of the system to CO2 from the convoluting influences of internal variability and responses to other forcings (aerosols, solar, etc) entails considerable uncertainty in ECS (Schwartz, 2012) and thus: 1) the use of a model is unavoidable, 2) it is a misnomer to present 20th Century instrumental approaches as being “observational estimates”.

3. Limited warming during the hiatus does not point at a low ECS but has been driven by the vertical redistribution of heat in the ocean, confirmed by persistence in the rate of thermal expansion since 1993 (Cazenave et al 2014).

4. Recent observations have reinforced the likelihood that the current hiatus is consistent with such simulated periods.

5. Attempts to isolate the effects of CO2 on the temperature record are inherently an exercise in attribution and the use of a model is therefore unavoidable.

6. Lewis underestimates the weaknesses and in doing so is at odds with the originators of this method (e.g. Forster et al. 2013).

7. Statistical techniques, particularly when trained over a finite, complex, and uncertain data record in which forcings are also considerably uncertain, are no panacea to the fundamental challenge of physical uncertainty.

8. Assessing ECS solely with statistical approaches using simple models that capture little of the climate system’s physical complexity, trained on a limited subset of questionably relevant surface observations, and based on largely untested physical assumptions is impossible.

I consider it very interesting to focus first on the second con of James and the related second con of John:

Lewis’ four preferred observationally-based studies are not as unimpeachable or model-free as he claims but based on a highly simplified model that imperfectly represents the climate system.

And:

Uncertainty in observations and the need to disentangle the response of the system to CO2 from the convoluting influences of internal variability and responses to other forcings (aerosols, solar, etc) entails considerable uncertainty in ECS (Schwartz, 2012) and thus: 1) the use of a model is unavoidable, 2) it is a misnomer to present 20th Century instrumental approaches as being “observational estimates”.

Lewis fully disagrees since he claims that:

In a properly designed observationally-based study, the best estimate for ECS is completely determined by the actual observations, as is normal in scientific experiments. In any event, the ECS estimate will be far more closely related to observations than are GCM ECS values.

I think it would be valuable to discuss this difference in opinion in more detail.

Bart has done an excellent job in summarising the issues, and in fact I’m not sure that I have a lot to add to my previous comments. I do think Nic Lewis over-states the case for the so-called “observational estimates” in a number of ways. Clearly, even these estimates rely on models of the climate system, which are so simple and linear (and thus certainly imperfect) that they may not be recognised as such.

Further issues arise with his methods, though in my opinion these are mostly issues of semantics and interpretation that do not substantially affect the numerical results. (For those who are interested in the details, his use of automatic approach based on Jeffreys prior has substantial problems at least in principle, though any reasonable subjective approach will generate similar answers in this case.) The claim that “observations alone” can ever be used to generate a useful probabilistic estimate is obviously seductive, but sadly incorrect. Thus, his results are not the peerless answer that he claims.

Nevertheless, they are a useful indication of the value of the equilibrium sensitivity, and I would agree that these approaches tend to be the most reliable in that the underlying assumptions (and input data) are generally quite good. A caveat arising from very recent research is the matter of forcing efficacy raised by Shindell and explored by Kummer and Dessler. I would like to see this new literature reconciled with previous research, especially that relating to detection and attribution, which already implicitly includes an (a priori unknown) efficacy factor in its estimation methods – and which, I believe, generally reaches contrary conclusions.

A quick response to James Annan’s recent comment.

First, I agree that observationally-based climate sensitivity estimates also involve use of climate models. I said so in my guest blog. I did not claim that observations alone can be used to generate a useful estimate of ECS. But, unlike estimates based directly on GCMs, or on constraining GCMs, observationally-based ECS estimates do not generally depend to first order on the ECS values of the climate models involved.

Second, just to clarify, my point summarised by Bart as “In a properly designed observationally-based study, the best estimate for ECS is completely determined by the actual observations” relates to the ECS estimation once the details of the method and the model used have been fixed.

I’m not sure exactly what James refers to when he writes “his results are not the peerless answer that he claims”, but if it is to the results in my objective Bayesian 2013 Journal of Climate paper (available here then I do not claim that they are perfect. (They are in a sense peerless, but only in that everyone else carrying out explicitly Bayesian multidimensional climate sensitivity studies seems to have used a subjective approach.)

I agree that Bart’s summary of the issues is excellent and am glad that we have broad agreement that the use of some model is intrinsic to all approaches to estimate ECS. The quality of the estimate thus hinges critically on the quality of the model. Like Bart, I also find this perspective to be at odds with the statement that observational-based estimates are “completely determined by the actual observations”.

I would also like to add that I do find “pros” for approaches attempting to estimate ECS from the observational record, per my comments on Nic’s piece. I genuinely do think that they have the potential to play an important role in constraining ECS once their strengths and weaknesses are broadly understood. I also suggest a means for doing so – namely exploring such methods in a framework that is tightly constrained. As mentioned, using a model whose sensitivity is known and whose variability is thoroughly vetted provides such an opportunity. A model ensemble can be generated to encompass the full range of uncertainty arising from forcing (including a consideration of direct/indirect effects and efficacy) and internal variability, and these methods can be applied over records of varying length and phases of internal modes to evaluate their robustness. To my knowledge, such an examination has yet to be done. Am I perhaps overlooking one? As such, I see no solid basis for rejecting an approximate range for ECS of 2.0 to 4.5 with a best estimate of about 3.4. It is noteworthy as well that an additional “pro” of these methods, once they are understood, is that they hold the promise of saving the countless CPU-hours of computation involved in estimating ECS from a fully coupled simulation (as a complement to the Gregory method).

Lastly, I would like to reiterate my position that I do not believe any method for estimating ECS should be rejected outright. The challenge as I see it is how to understand the apparent divergence in results provided by each in terms of their respective strengths and weaknesses. From my point of view, Shindell (2014) and Kummer and Dessler (2014) provide a viable rationale for reconciling such disagreements. Is there any basis for rejecting them outright?

Hello everybody. My usual nick is: Antonio (AKA “Un físico”) but from now on and in here I will use the nick “Antonio AKA Un fisico”. Well, going to the point of this post. After my analysis in: https://docs.google.com/file/d/0B4r_7eooq1u2TWRnRVhwSnNLc0k

(see subsection 3.1, pgs. 6&7) anyone can easily conclude that IPCC’s ECS is an invented value: that it is science fiction.

Nic Lewis is wrong when he says: “Regarding paleoclimate study ECS estimates, I concur with the conclusions reached in AR5. So, overall, this line of evidence indicates that there is only about a 10% probability of ECS being below 1°C and a 10% chance of it being above 6°C”.

So let’s dialogue about IPCC’s paleoclimate stimations of ECS. Please Nic, read my pg.7. Spetially the paragraph: “Error bars (see, for example, WGI AR5 Figure 5.2 {p.395 (411/1552)}) tend to grow as we move to the past; spanning not only in the vertical axis (in CO2 RF or GST), but in the time axis. Thus, reconstructing CO2 RF, or GST, vs. time: becomes a highly inaccurate issue”.

So Nic, now that you understand my view, please demonstrate to all of us why you agree with IPCC: why is there only about a 10% probability of ECS being below 1°C?. [you cannot expect Lewis to go through your document now; make your comment more on topic]

This is a very interesting discussion. Here’s how I think about the low end of the climate sensitivity range. Doubling carbon dioxide by itself gives you about 1.2°C of warming. Add in the water vapor and lapse-rate feedbacks, which we have pretty high confidence in, and you get close to 2°C. Then add in the ice-albedo feedback and you get into the low 2s. To get back down to 1.5-ish, the cloud feedback needs to be large and negative. Is that possible? Yes, but essentially none of the evidence supports that. Instead, most evidence suggests a small positive cloud feedback, which would push the ECS to closer to 3°C. There are nuances to how to interpret this, of course, (e.g., these were derived from inter annual variations, not long-term climate change) but I find these estimates, all based on observations, to be pretty convincing.

As far as the ECS calculations based on the 20th-century observational record go, I think they’re useful and interesting, but I have less confidence in them. What’s particularly troubling to me is that we have no observations of forcing — it is an entirely model-generated parameter. If there is a single most troubling weakness in any of the calculations, to me that is it. Thus, I put most of my confidence in the bottom-up estimate described in the last paragraph and conclude that the climate sensitivity is going to be above 2°C.

If you ask what evidence would convince me that the ECS was 1.5°C, it would be evidence of a negative feedback that could cancel the known positive ones.

FYI, I make this argument in this YouTube video: http://www.youtube.com/watch?v=mdoln7hGZYk

Thanks, Andy Dessler

Dear James, John and Nic,

Thanks for your last comments.

@James: You indicate Nic seriously underestimates the uncertainties in observational based studies (i.e. they use a far too simple ‘model’), but at the same time you say ’these approaches tend to be the most reliable’. I interpret this as you partly agree with Nic that these approaches should be weighted stronger than studies based on other lines of evidence. I guess that is also why you arrive at a range for ECS (i.e. 2.0 – 3.0) in the lower part of the IPCC range. Am I right? And if so do you consider this range of 2.0 – 3.0 as a likely range or a very likely range?

@Nic: you write ‘I did not claim that observations alone can be used to generate a useful estimate of ECS.’ To be honest, as far as I have read your contributions I do think you did such a claim, but maybe I have interpreted them incorrectly. Could you explain what else is needed to generate an estimate for ECS?

Regarding your second point, what does it exactly imply? It is obvious to me that if a model and a method have been fixed, than ECS is completely determined. But the same holds for the other models and methods used in the other lines of evidence. Does it not?

Finally, Andy Dessler indicates that particulary troubling is the fact that there are no observations of forcing (and thus introducing a large uncertainty in the ‘energy budget’ method) especially due to the uncertainties in aerosols. What is your reply on that, Nic?

@John: you indicate that observational based studies could have an important role in constraining ECS if observations would be used in combination with GCMs. Then you come up with a range for ECS of 2.0 to 4.5 and a best estimate of about 3.4. Could you say a bit more how you arrive at these numbers? (Especially the relatively high best estimate).

You mention Shindell(2014) and Kummer and Dessler (2014) as a possible explaination for the difference between studies based on the instrumental period and the ones based on other lines of evidence. Could you indicate in a few sentences how these studies close the gap? (And in what direction?)

Finally, in a public comment, Andy Dessler adds that a (strong) negative cloud feedback is needed to get an ECS as low as suggested by Nic. However, current studies suggest the opposite, he says. However, in his guest blog Nic writes that: ‘observational evidence for cloud feedback being positive rather than negative is lacking’. This is a remarkable contradiction that needs some clarification, I would say. Especially because Nic also writes that Global Circulation Models (GCMs) have too high ECS values (i.e. over 2°C) due to positive cloud feedbacks and adjustments.

Looking forward to your responses.

In reply to Andrew Dessler:

I think that your argument regarding sensitivity is broadly reasonably and indeed we used it as a basis for a vague prior in our 2006 paper, but I don’t think such an argument from ignorance (ie, we don’t know much cloud feedback) can really be used as a confident estimate. Assuming the comment about forcing being model-generated refers primarily to anthropogenic aerosols, I’d be interested to hear how your calculations work out when applied to the last 30 years when by common consent, the change in aerosol forcing has been fairly modest.

I agree that Bart has made a good summary. I will attempt here to address his second question.

Let me start by saying that I reciprocate Johns views: I do find “pros” for approaches attempting to estimate ECS from the complex numerical climate models and studies of feedbacks represented in them as well as from the observational record. I think that such approaches may offer the most accurate way of constraining ECS once they are known to represent all significant climate system processes sufficiently accurately. In the meantime, they complex climate models play many other important roles – not least in helping gain a better physically-based understanding of the workings of the climate system. I agree that very simple statistical models cannot provide much help gaining such understanding, even if they currently offer the most robust way of estimating ECS.

In his piece, John discussed simulations by the NCAR CCSM4 model in some detail. One of my well informed contacts in the UK climate modelling community told me that the NCAR model was one of only three CMIP5 models in the world that they considered to be good. But, as Figure 5 in my guest blog shows, over the 25 year period 1988-2012 it simulated four times faster warming in the important tropical troposphere than the average of the two satellite-observation based datasets (UAH and RSS), and five times faster than the ERA-Interim reanalysis (which I understand is thought to be the best of the reanalysis datasets).

As shown in my Figure 4, CCSM4 also simulated global surface warming over the 35 year period 1979-2013 more than 50% higher than HadCRUT4 – and than either of the other two main observational datasets. Moreover, 1979–2013 is a period in which natural internal variability seems to have had a positive influence on global temperatures. That is due to the Atlantic Multidecadal Oscillation (AMO) having moved from near the bottom to near the top of its range over that period, according to NOAA’s AMO index (a slightly smoothed version of which is available here : the black line in panel a). Over the 64 year period 1950-2013, which started and ended with the AMO index at much the same level, CCSM4’s trend in simulated global surface temperature was nearly 85% higher than per HadCRUT4.

IMO, no sensible scientist would place his faith in the sensitivity of a model that has performed like this being anywhere near correct, or indeed view the model itself as satisfactorily representing the real climate system.

I agree with John’s comment that the quality of an ECS estimate depends on the quality of the model (as well as of the observations). But quality, in this context, means how accurately the model translates the observations into an estimate that correctly reflects the information the observation provides about ECS. A simple statistical model may in this context be much higher quality than a sophisticated method based on a state-of-the-art coupled GCM. This perspective is not at odds with my statement that sound observationally-based estimates are “completely determined by the actual observations”. My next sentence read: “To the extent that the model, simple or complex, used to relate those observations to ECS is inaccurate, or the observations themselves are, then so will the ECS estimate be.”

Consider estimating a distance on the ground by measuring on a map. The estimate will entirely depend on the measurement made, but will be inaccurate if the map is poor and/or if the wrong scale factor is used. The point I was making is that ECS values of GCM are not completely determined by the observations, even though model development is informed by observations. Nor are ECS values so determined where they are estimated by methods that are unable – typically because of climate model limitations or use of expert priors – properly to sample the entire range of values of ECS and other parameters being estimated alongside it, ignoring any part ruled out by the observations.

I would like to respond to John’s suggestion of exploring methods to estimate ECS from the observational record in a framework that is tightly constrained, using a model whose sensitivity is known and whose variability is thoroughly vetted. I concur, although depending on the approach used the realism of variability in the model with known sensitivity may not matter.

One approach is to use a detection and attribution method, comparing model simulations and observations for some “fingerprint” of the forcing of interest and using regression to find the best scaling factor. This provides estimates of TCR more readily than of ECS. If the scaling factor is for the response to greenhouse gases (GHG) over the last 60 or more years of the instrumental period, multiplying the scaling factor by the model’s TCR provides an observationally-based estimate of TCR. Figure 10.4 of AR5, panel (b), shows the GHG scaling factors (green bars) estimated by three such studies. The corresponding observationally-based TCR estimates for the nine CMIP5 GCMs studied in Gillett et al (2013), which uses the longest data time series, have a median of 1.45°C, close to median estimates of ~1.35°C that I have derived using simple energy balance approaches. A problem with such studies is difficulty in obtaining a complete separation of responses to different forcings. Incomplete separation between responses to GHG and aerosol forcing may lead to overestimation of the GHG scaling coefficient and hence of TCR.

An approach that avoids the separation problem is to systematically vary parameters of a climate model, varying the model physics so as to achieve a large number of different combinations of ECS, ocean vertical diffusivity (or other measure of ocean heat uptake efficiency), aerosol forcing and any other key climate system properties, and performing simulations for each – so-called PPE studies. Obviously, those properties must be calibrated in relation to the model parameters. The simulation results are then compared with observations and the best fit found. The fidelity of model variability is normally not critical since its effects are suppressed by using averages over ensembles of simulations. Real-world variability and covariability is then estimated from one or more separate much longer simulation runs, not necessarily by the same model, and appropriately allowed for.

This PPE approach can be used with full scale coupled GCMs, but the extensive supercomputer time required is very expensive. More seriously, it may prove impracticable to explore all combinations of climate system properties that are compatible with the observations. That was the problem with the Sexton et al (2012) and Harris et al (2013) PPE studies, as explained in my guest blog. often used In the idea is to minimise the effects of model variability by using ensembles of simulations, real-world variability then being allowed for using simulations by a more complex model.

It is more usual to use a PPE approach with models that are simpler than a GCM, typically resolving the globe horizontally only by hemisphere and land vs ocean, and maybe having a single layer atmosphere. However, several such studies have been carried out using the MIT climate model, which is in effect a 2D GCM, key parameter settings of which have been calibrated against 3D coupled GCMs. Longitude, which is not resolved, is generally far less important than latitude. Such studies include Forest et al (2002 and 2006), Lewis (2013) and Libardoni & Forest (2011, corrigendum 2013). Internal covariability was allowed for by the use of long control run simulations from full coupled GCMs, and multiple observations were used to constrain ECS, aerosol forcing and ocean effective diffusivity. Are such studies in principle more acceptable to John than observationally-based estimates using simpler numerical climate models or simple mathematical/statistical models?

Bart,

OK, you’ve put me on the spot. I was deliberately a little vague in my initial estimate, because I had not done any detailed calculations recently and there’s been a lot of new literature in the last couple of years. I do think that my range of 2-3C could be considered “likely”, bearing in mind that this still leaves a substantial probability (33%) of a value outside that range.

I don’t really like the term “weighting” as it might be interpreted as taking some sort of weighted average, which I don’t think is really appropriate. But yes, I do consider the transient 20th century warming-based estimates more trustworthy than other approaches, as they are more-or-less directly based on the long-term (albeit transient) response of the climate system to anthropogenic forcing, which is after all what we are interested in here!

Hi Bart,

Thanks for the additional questions. Firstly to clarify my position, I have indicated that so-called instrumental-record studies could play an important role in the discussion if they were more thoroughly vetted and understood. One need not use a GCM at all, though that approach could provide a useful well-constrained framework for such a vetting. The fact that this has not yet been done in any thorough sense to me is startling, given the sweeping statements that have been made based on such techniques and given how widely scrutinized other approaches (e.g. GCMs) have been.

My basis for the lower part of my estimated range is very much in line with Andy’s comments based on feedbacks – an approach I focus on in my original post. I know of no valid studies supporting the strong negative cloud feedback needed to arrive at a sensitivity well below 2C. I know of several claiming to show such a negative feedback that have been revealed (by myself and others) to clearly be wrong (Lindzen and Choi, Spencer and Braswell, among others). Lindzen himself has admitted to major errors in this work (http://dotearth.blogs.nytimes.com/2010/01/08/a-rebuttal-to-a-cool-climate-paper/?pagemode=print). From other recent work, (multiple works each by Soden, Webb, Romanski/Rossow, Sherwood, Brient/Bony, Gregory, Gettelman, Dessler, Jonko, Norris, Sanderson, Shell, Bender, Vechhi, Lauer …) that examine the issue across observations, cloud resolving models, and GCM archives of various sorts, there is persuasive evidence that the feedback is not strongly negative but rather is likely to be positive, perhaps strongly so. Clearly there remains a considerable range of uncertainty on the exact value of the feedback but in my view the evidence does not allow for a strong negative feedback. And so how does one construct a physical basis for a value well below 2?

My upper end of the range is based on my evaluation of models and related work in the literature (e.g. by many of the above mentioned authors). For instance, in my view, the CESM1-CAM5 ensemble that I present in my Fig. 2 shows no obvious bias in its reproduction of the surface temperature record yet its sensitivity is 4.1! Again, the main disparity between the observed record and the ensemble mean occurs during the hiatus, yet this does not accompany any reduction in the planetary imbalance (in nature or comparable model ensemble members) and therefore is not evidence for a strong negative feedback. It is therefore also not an indication of biases in model feedbacks and is not a basis for revising our sensitivity estimates downward. Moreover, the key processes that drive sensitivity are actually better represented in many of the high sensitivity models (Fasullo and Trenberth 2012, Sherwood et al. 2014) and the sensitivities of the poorest performing models in CMIP3 (e.g. 2.1 of NCAR PCM1 which we know has major problems) have not been reproduced by models in CMIP5, as a broader improvement (though not perfection) of key processes has been realized.

Regarding the work on efficacy, I’ll let texts from the abstracts do the talking, paraphrasing where useful.

Shindell: …transient climate sensitivity to historical aerosols and ozone is substantially greater than the transient climate sensitivity to CO2. This enhanced sensitivity is primarily caused by more of the forcing being located at Northern Hemisphere middle to high latitudes where it triggers more rapid land responses and stronger feedbacks. I find that accounting for this enhancement largely reconciles the {instrumental and GCM ranges}.

Kummer and Dessler: Previous estimates of ECS based on 20th-century observations have assumed that the efficacy is unity, which in our study yields an ECS of 2.3 K (5%-95%- confidence range of 1.6-4.1 K), near the bottom of the IPCC’s likely range of 1.5- 4.5 K. Increasing the aerosol and ozone efficacy to 1.33 increases the ECS to 3.0 K (1.9-6.8 K), a value in excellent agreement with other estimates. Forcing efficacy therefore provides a way to bridge the gap between the different estimates of ECS.

John comments that from his point of view, Shindell (2014) and Kummer and Dessler (2014) provide a viable rationale for reconciling disagreements between different methods of estimating ECS, and asks if there is any basis for rejecting them outright. The answer to that question is yes in relation to Kummer & Dessler (2014), and to a very large extent in relation to Shindell 2014.

To avoid a very lengthy comment, I will just address Kummer & Dessler (2014) here. It is titled “The impact of forcing efficacy on the equilibrium climate sensitivity” and states that ‘Recently, Shindell [2014] analyzed transient model simulations to show that the combined ozone and aerosol efficacy is about 1.5.’ Kummer & Dessler estimate ECS using an energy balance method, as per Equation (1) in my blog, based on a forcing estimate with ozone and aerosol forcing either unscaled (giving an ECS best estimate of 2.3°C) or, following Shindell (2014) scaled up by an efficacy of 1.5 or 1.33 (giving best estimates for ECS of respectively 3.0°C or 3.5°C). I am afraid that there are several problems with their paper.

First, what Shindell actually discusses is transient sensitivity to inhomogeneous aerosol and ozone forcings being higher than to homogeneous CO₂ forcing. He never claims that these inhomogeneous forcings have a efficacy of greater than one. He never refers to efficacy at all in his paper or its Supplementary Information.

The efficacy of a forcing agent is the surface temperature response to radiative forcing from that agent relative to the response from carbon dioxide forcing. Studies of the efficacy of aerosol forcing (including by Hansen and by Shindell) have typically found a value close to one. As AR5 says, by including many of the rapid adjustments that differ across forcing agents, the effective radiative forcing (ERF) concept it uses – which is generally also used in energy budget ECS estimates – in any case includes much of their relative efficacy. Shindell’s claim isn’t that inhomogeneous forcings (mainly aerosol) have a high efficacy, but that they are concentrated in regions of high transient sensitivity, thereby having more effect on global surface temperature than if they were uniformly distributed.

Presumably as a result of Kummer & Dessler confusing forcing efficacy with transient climate sensitivity, their calculations make no physical sense. Their method appears to hugely over-adjust for the effects on ECS estimation of the higher transient sensitivity to aerosol and ozone forcings that Shindell (2014) estimates. Troy Masters has an excellent blog explaining this problem here.

Secondly, Kummer & Dessler state that their forcing time series is referenced to the late 19th century and accordingly use a reference (base) period to measure changes in global surface temperature from of 1880-1900. That would be fine were it true, but it is not. Their forcing time series actually come from AR5 and are referenced to 1750. The mean total forcing during 1880-1900 was substantially negative relative to 1750 due to high volcanic activity. Referencing the forcing change to a base period of 1880-1900, as necessary to match their temperature change, reduces their non-efficacy-adjusted ECS estimate to 1.5°C. And their headline 3.0°C best ECS estimate, based on an aerosol and ozone ‘efficacy’ of 1.33 and their faulty adjustment method, become 1.7°C.

There are other issues with the paper, but I will leave it at that. I’ve probably already upset Andrew Dessler quite enough!

To James Annan:

Overall, I think I agree with James’ comments — I wish my argument were stronger. However, to be fair, it’s important to realize that there are no really strong arguments for any particular climate sensitivity range — if there were, we wouldn’t be having this argument. Rather, any argument about climate sensitivity requires you to evaluate conflicting arguments and decide one is right and the other isn’t. So while I think that ECS > 2°C, I understand the IPCC authors who decided the ECS > 1.5°C.

To Nic Lewis:

I appreciate your comments. Your statement about the referencing period of the forcing is correct and that will be corrected in the galleys. Assuming that the climate in the late 19th century is warmer than that in the mid 18th century (probable since radiative forcing is +0.3 W/m2 in the late 19th century), then referencing both time series to 1750 will increase the calculated climate sensitivity (I can explain why if it’s not clear). Thus, it does not affect our conclusion that incorporating efficacy has a significant effect on the inferred climate sensitivity.

I also agree that there is a useful clarification to be made between Shindell’s analysis and ours. The efficacy in Shindell’s analysis is a combination of a heat-capacity effect and an effect from differing climate sensitivities to aerosols/ozone and greenhouse gases. The effect due to differing heat capacities is not relevant for the ECS, but the other one is. Given the weaker radiative restoring force at high latitudes, I find it perfectly reasonable that there is a significant difference in sensitivity to these different forcers — and if there is, it resolves an otherwise confusing situation. As we say in the paper, determining this is should be a priority.

To John Fasullo:

I agree with just about everything you say!

Nic Lewis here exhibits a decidedly un-self-critical attitude in his comments here – I don’t think this reflects well on his arguments, or for the likelihood of any resolution of this “dialogue”. To move forward it is essential to recognize merits in opposing views, in fact to try to acknowledge the best arguments the “other side” may have. Both John Fasullo and James Annan do this in their comments, describing Lewis’ and similar instrumental-based approaches in very fair terms, with considerable praise for their good points. But Lewis insists on an extremely biased presentation. As just one very clear example to me, he seems to acknowledge none of the previous debate that occurred in this forum on the tropical hot spot – citing conflict between models and “observations” on mid-troposphere warming as an indictment of the models, when in fact the measured trends are clearly still very uncertain. Lewis asserts several similar claims that fall down if proper uncertainty measures are applied.

A little more honest self-criticism would be a huge help here. And addressing what seem to be contradictions in what’s been raised already, for example the one Bart Strengers pointed out, is also important.

Bart queries my comment that ‘I did not claim that observations alone can be used to generate a useful estimate of ECS.’ and asks what else is needed to generate a estimate for ECS. I think this is an issue of terminology. When I refer to observational estimates, I do not imply that a sound ECS estimate can be derived from observations alone. As I wrote in my guest blog, ‘Whichever method is employed, GCMs or similar models have to be used to help estimate most radiative forcings and their efficacy, the characteristics of internal climate variability and maybe other ancillary items.’

Let me take the example of an energy budget estimate of ECS, using Equation (1) in my guest blog. The change in global surface temperature is typically taken from a dataset that involves multiple measurement time series at different locations and a more or less sophisticated mathematical method of averaging the measurement, adjusting for inhomogeneities, etc. That method could be regarded as a model, but not in the normal sense of the word. Most people would view HadCRUT and other global temperature estimates as observational data, not model outputs. Planetary heat uptake / radiative imbalance in the final period can be calculated in similar ways. These may involving rather more processing and adjustments, but the outcome is still generally regarded as observational data.

On the other hand, it is generally necessary to rely on coupled GCM simulations to derive heat uptake in the base period, since that is typically in the second half of the nineteenth century, before proper observations of ocean temperatures at depth started. That estimate will have a first order dependence on the GCM’s ECS value. However, the absolute value of heat uptake in the nineteenth century is small, so it has only modest effect on ECS estimation, and an approximate adjustment can be made to the GCM’s simulated value by reference to the relationship of the GCM’s ECS to the energy budget ECS estimate.